Web scraping is the process of using bots to extract content and data from a website. When a user requests a page, the server responds with HTML. Web scraping parses this HTML and pulls out the required data.

That's enough theory. Now it's time to put it into practice.

We use cheerio for this project. You should be familiar with CSS selectors and Chrome DevTools.

Now let's create a server with Node.js and Express.js.

//index.js

const express = require('express');

const app = express();

const PORT = 5000;

app.listen(PORT, () => {

console.log(`server is running ${PORT}`);

})

Nothing new, right? Before we go to the next step, let's clarify the goal we want to achieve with web scraping.

We are going to take a movie URL, fetch some data about the movie, and save it in CSV format.

Install cheerio, axios and json2csv.

We are going to use cheerio in our index.js file. We fetch data from this link:

https://www.imdb.com/title/tt5354160/?ref_=nv_sr_srsg_0

(By the way, this is a masterpiece movie. You should watch it 😊).

//index.js

const express = require('express');

const axios = require('axios');

const cheerio = require('cheerio');

const fs = require('fs')

const json2csv = require('json2csv').Parser;

const app = express();

//target link

const movieUrl = "https://www.imdb.com/title/tt5354160/?ref_=nv_sr_srsg_0";

//async IIFE: this function is defined and invoked immediately.

(async () => {

const data = [];

const response = await axios(movieUrl, {

//these headers make the request look like it comes from a real browser

headers: {

"accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9",

"accept-encoding": "gzip, deflate, br",

"accept-language": "en-GB,en-US;q=0.9,en;q=0.8,bn;q=0.7"

}

})

let htmlBody = response.data;

let $ = cheerio.load(htmlBody);

let movieName = $(".khmuXj>h1").text();

let rating = $(".jGRxWM").text();

let trailer = $('.ipc-lockup-overlay.sc-5ea2f380-2.gdvnDB.hero-media__slate-overlay.ipc-focusable').attr('href');

data.push({

movieName, rating, trailer

})

const j2cp = new json2csv();

const csv = j2cp.parse(data);

fs.writeFileSync('./movieData.csv', csv, "utf-8")

})()

const PORT = 5000;

app.listen(PORT, () => {

console.log(`server is running ${PORT}`);

})

Let me explain this code step by step. movieUrl is the target link.

After that, there is an asynchronous anonymous function that calls itself. If you are familiar with the JavaScript IIFE (immediately invoked function expression), you already know this pattern.

Then we create an empty array called data. It helps when we convert our data to CSV format, because json2csv expects JSON data (here, an array of objects) to convert to CSV.
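As a minimal sketch of the pattern, the snippet below wraps the body of an async IIFE in try/catch so that a failed request does not surface as an unhandled rejection. The scrape function is a hypothetical stand-in for the real axios and cheerio work, not part of the original code.

```javascript
// Hypothetical stand-in for the real scraping work (assumption for this sketch).
async function scrape() {
  return { movieName: 'Example Movie' };
}

// The function expression is wrapped in parentheses and invoked right away.
// An async function always returns a promise, which we keep in `done`.
const done = (async () => {
  try {
    const result = await scrape();
    console.log('scraped:', result);
    return result;
  } catch (err) {
    // Any error thrown by the awaited call ends up here.
    console.error('scraping failed:', err.message);
    return null;
  }
})();
```

Keeping the returned promise in a variable is optional; it is only useful if other code needs to wait for the scrape to finish.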

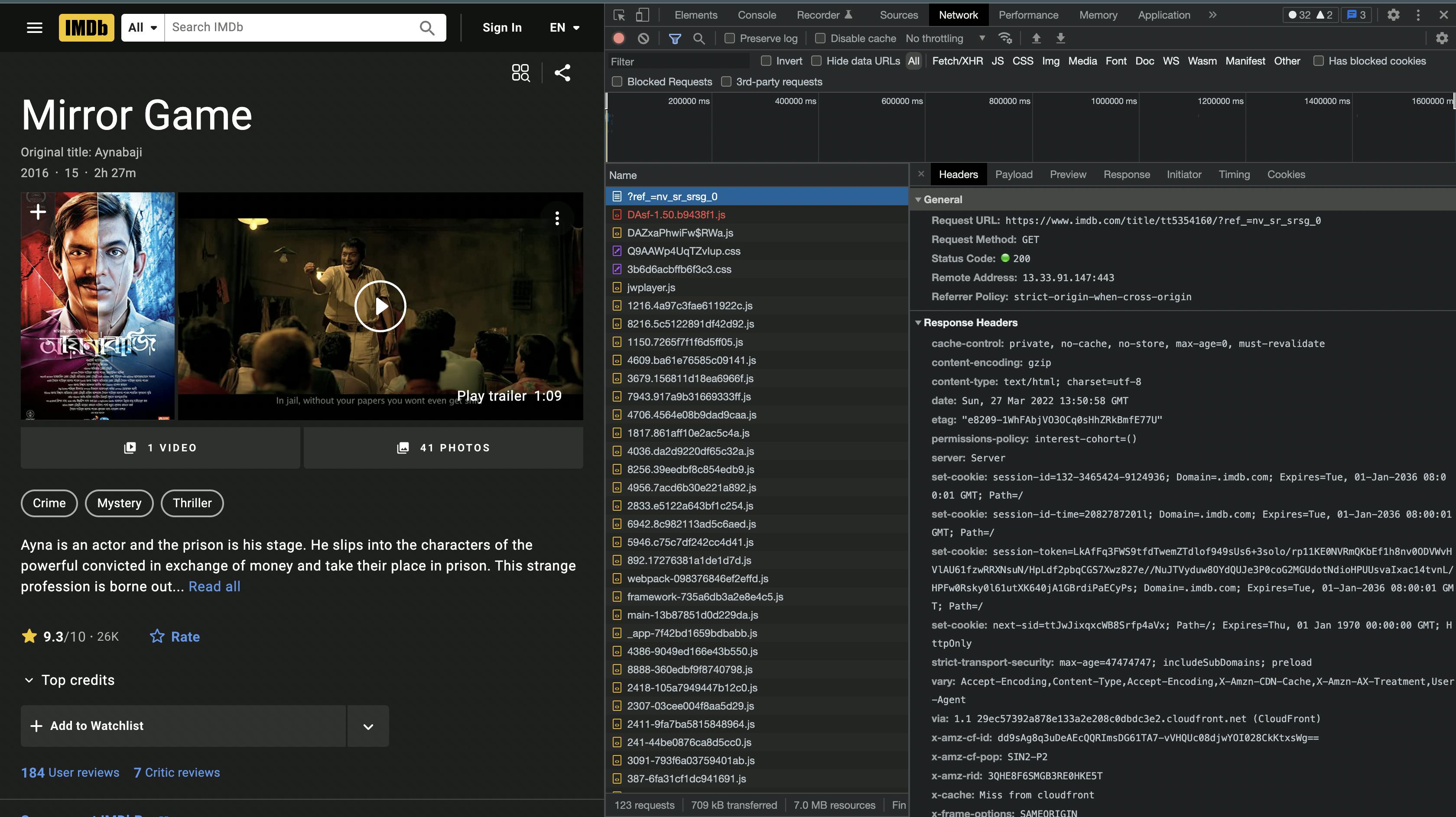

We send a GET request with axios. One thing to notice here is where the header values come from. We get them from the Network tab of the browser. Open your browser, right-click on the page, and choose Inspect. Then open the Network tab and load our target URL. You will see many file names, statuses, and other information in the Network tab. Click the first entry.

Now you can find the header values under Request Headers in the Network tab. A question may come to mind: why send headers with a GET request at all? When you fetch data from a website, there is a good chance the website will not welcome you, because it owns that data. If the website detects that a request does not look like normal browser traffic, it can block it. Headers help convince the server that the request is coming from a normal browser. You can copy the whole set of request headers into your code.
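If you copy the raw header text from DevTools, a small helper can turn it into the kind of object passed as headers in the axios call. This is only a sketch: parseRawHeaders is a hypothetical helper name and the sample text is shortened for illustration. It skips lines that start with a colon, such as :authority, which browsers display for HTTP/2 requests but which are not regular request headers.

```javascript
// Hypothetical helper: converts "name: value" lines into a plain object.
function parseRawHeaders(raw) {
  const headers = {};
  for (const line of raw.split(/\r?\n/)) {
    const trimmed = line.trim();
    // Skip blank lines and HTTP/2 pseudo-headers like ":authority".
    if (trimmed === '' || trimmed.startsWith(':')) continue;
    const idx = trimmed.indexOf(':');
    if (idx === -1) continue; // not a "name: value" line
    const name = trimmed.slice(0, idx).trim().toLowerCase();
    const value = trimmed.slice(idx + 1).trim();
    headers[name] = value;
  }
  return headers;
}

// Sample text in the shape DevTools shows it (shortened for the example).
const copied = `
:authority: www.imdb.com
accept: text/html,application/xhtml+xml
accept-language: en-GB,en-US;q=0.9,en;q=0.8
`;

const headers = parseRawHeaders(copied);
console.log(headers);
```

The resulting object could then be used as the headers option of the request.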

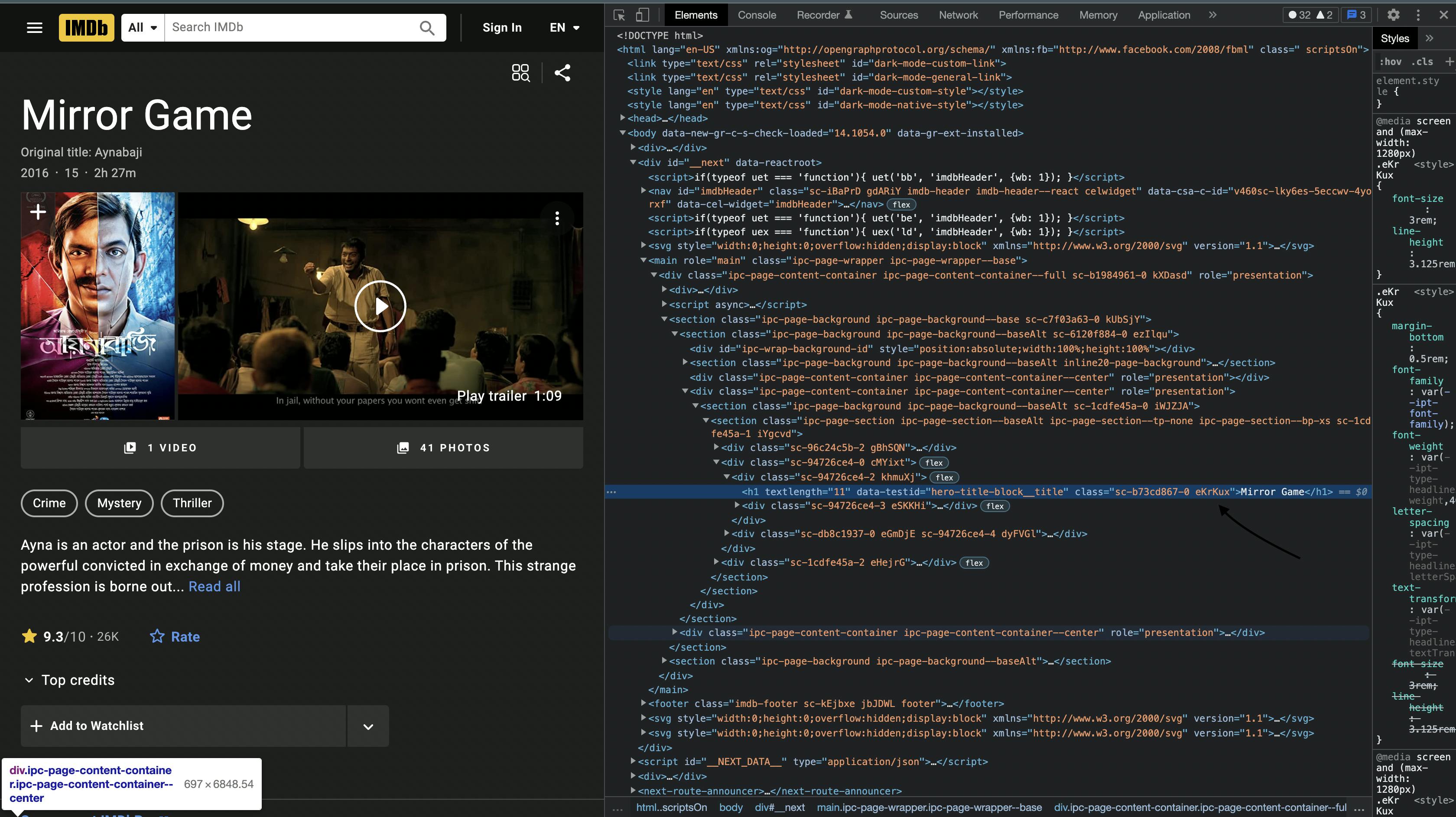

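let $ = cheerio.load(htmlBody) parses the HTML so that we can run selectors against it. Then go to the Elements tab, inspect the data you want, and use its class name as the selector. Here we get the movie name, rating, and trailer. Class names such as khmuXj look auto-generated, so they may change when the site is updated; check them in the Elements tab before you run the code.

(Look at the arrow in the picture. You can get a class name like this.)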

let movieName = $(".khmuXj>h1").text();

let rating = $(".jGRxWM").text();

let trailer = $('.ipc-lockup-overlay.sc-5ea2f380-2.gdvnDB.hero-media__slate-overlay.ipc-focusable').attr('href');
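One detail worth checking: attr('href') returns whatever is written in the markup, which may be a relative path, and it returns undefined when the selector matches nothing. The sketch below resolves such a value against the page URL with Node's built-in URL class. toAbsoluteUrl is a hypothetical helper, and the sample path is invented for illustration.

```javascript
// Hypothetical helper: turns a possibly relative href into an absolute URL.
function toAbsoluteUrl(href, pageUrl) {
  // attr('href') gives undefined when nothing matched, so fall back to ''.
  if (!href) return '';
  // URL resolves relative paths against the base and leaves absolute URLs as-is.
  return new URL(href, pageUrl).href;
}

const pageUrl = 'https://www.imdb.com/title/tt5354160/';

console.log(toAbsoluteUrl('/video/example-id/', pageUrl));
// prints "https://www.imdb.com/video/example-id/"
console.log(toAbsoluteUrl(undefined, pageUrl) === '');
// prints true
```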

Then the values are pushed into the data array with this code:

data.push({

movieName, rating, trailer

})

Then convert the data to CSV format using this code:

const j2cp = new json2csv();

const csv = j2cp.parse(data);
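To make the conversion less of a black box, here is a hand-written sketch of the general idea: the keys of the objects become the header row, and each object becomes one data row. This is not json2csv's implementation and it ignores the library's options; toCsv and escapeCell are hypothetical names, and the sample values are made up.

```javascript
// Wraps a value in double quotes and doubles any quotes inside it.
function escapeCell(value) {
  const text = value === undefined || value === null ? '' : String(value);
  return `"${text.replace(/"/g, '""')}"`;
}

// Builds CSV text from an array of flat objects.
// Assumes every object has the same keys as the first one.
function toCsv(rows) {
  if (rows.length === 0) return '';
  const fields = Object.keys(rows[0]);
  const header = fields.map(escapeCell).join(',');
  const lines = rows.map((row) =>
    fields.map((field) => escapeCell(row[field])).join(',')
  );
  return [header, ...lines].join('\n');
}

const sample = [{ movieName: 'Example "Movie"', rating: '7.5' }];
console.log(toCsv(sample));
```

This also shows why the scraped values go into an array first: each element of the array maps to one row of the file.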

Now, create the file with the Node.js fs module. fs.writeFileSync writes the CSV string to movieData.csv.
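A minimal sketch of the file-writing step on its own, assuming the CSV string is already built. It writes to the OS temp directory so it can be run anywhere; saveCsv is a hypothetical helper, the sample CSV is made up, and the code in this post writes to ./movieData.csv instead.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: writes the CSV text synchronously and returns the path.
function saveCsv(csv, filePath) {
  fs.writeFileSync(filePath, csv, 'utf-8');
  return filePath;
}

// Made-up sample content, only for demonstration.
const sampleCsv = '"movieName","rating"\n"Example Movie","7.5"';
const outPath = saveCsv(sampleCsv, path.join(os.tmpdir(), 'movieData.csv'));
console.log('saved to', outPath);
```

writeFileSync blocks until the file is written, which is fine for a one-off script like this one.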

Run your index.js file and enjoy the output.

Note: This blog is only for learning purposes.

Github Repo: github.com/alaminsahed/web-scraping-to-csv

Enjoy your coding!